AI Is No Longer “Screening” You, It’s Scoring You

If you ask most job seekers how AI affects hiring, the answer is usually the same: “It filters resumes.” That idea made sense years ago. In 2026, it’s outdated. Modern hiring systems don’t just decide whether you pass or fail a single gate. They continuously score you as you move through the pipeline.

This is why the phrase “AI rejection” is misleading. It suggests a single moment where a machine decides you’re out. In reality, today’s systems behave more like scoring engines than binary filters. Your application isn’t judged once. It’s evaluated again and again, with new data added at every step.

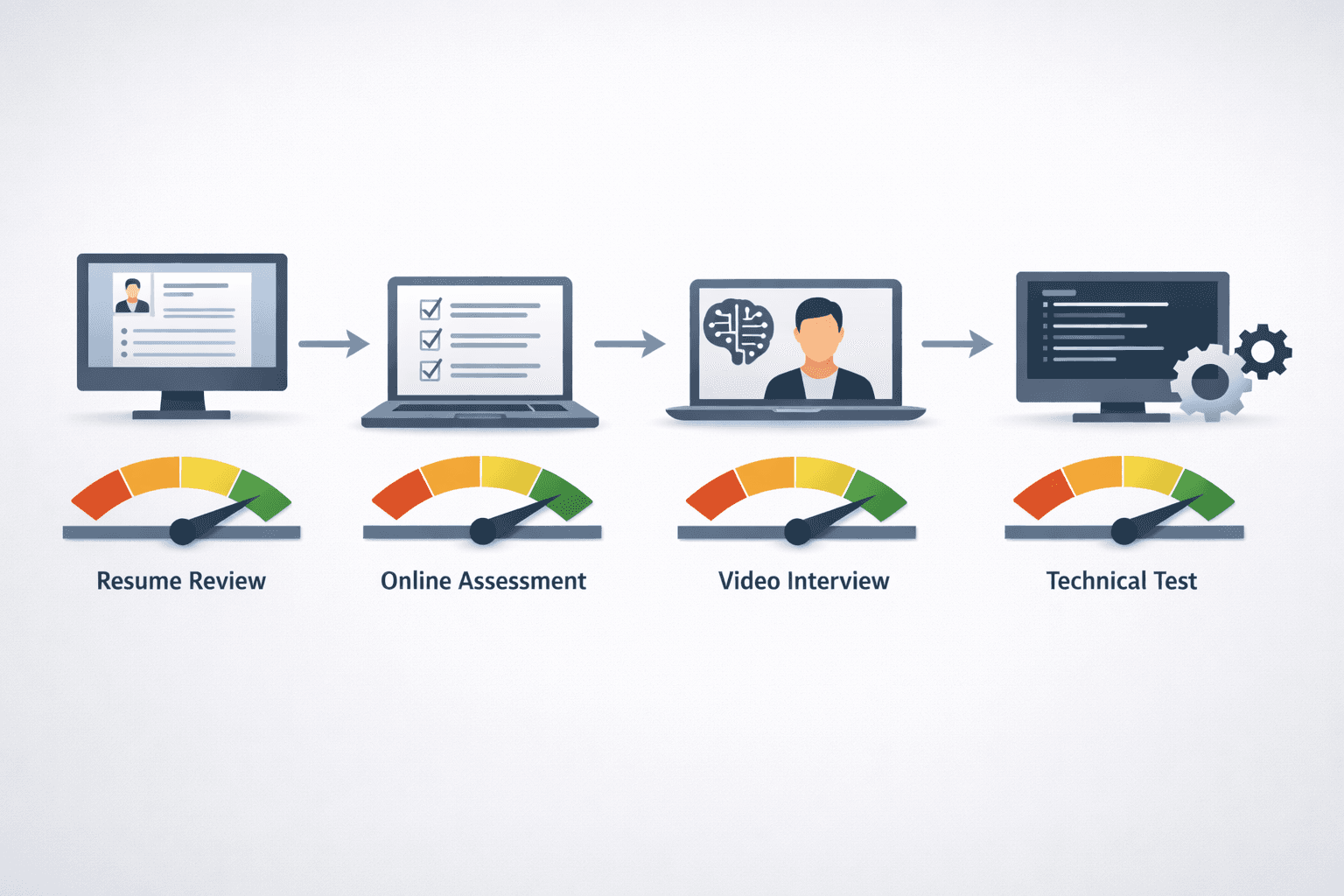

Think of the hiring process as a running total rather than a knockout match. Your resume contributes an initial score. An online assessment adjusts it. A video interview adds more signals. A technical round shifts the balance again. By the time a recruiter looks at your profile, they’re often seeing an aggregated picture shaped by dozens of small inputs, not one catastrophic failure.

This also explains a frustrating but common experience: “I didn’t bomb any interview, but I still didn’t get an offer.” In many cases, nothing went terribly wrong. Instead, your score gradually drifted downward across multiple stages, just enough to place you below other candidates.
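To make the “running total” idea concrete, here is a toy sketch in Python. The stage names, the 0 to 100 scale, and the plain running average standing in for the running total are all assumptions for illustration. Hiring vendors generally do not publish their scoring formulas, and real systems almost certainly weight stages differently.

```python
# Toy illustration of cumulative, multi-stage scoring.
# Stage names, scores, and equal weighting are hypothetical.
STAGES = ["resume", "assessment", "video_interview", "technical_round"]


def running_averages(stage_scores: dict[str, float]) -> list[float]:
    """Return the running average score after each stage, in pipeline order."""
    averages = []
    cumulative = 0.0
    for count, stage in enumerate(STAGES, start=1):
        cumulative += stage_scores[stage]
        averages.append(round(cumulative / count, 2))
    return averages


# Candidate A never "bombs" a stage but sits slightly below B at every step.
candidate_a = {"resume": 72, "assessment": 70, "video_interview": 71, "technical_round": 73}
candidate_b = {"resume": 75, "assessment": 74, "video_interview": 76, "technical_round": 75}

print(running_averages(candidate_a))  # [72.0, 71.0, 71.0, 71.5]
print(running_averages(candidate_b))  # [75.0, 74.5, 75.0, 75.0]
```

Neither candidate has a weak stage, yet A finishes 3.5 points behind B. That small, steady gap is the “nothing went wrong, but no offer” pattern in miniature.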

A typical pipeline might look like this: resume and profile review, followed by an online assessment, then a video or live interview, and finally a technical or role-specific round. At each point, AI systems quietly observe patterns, compare signals, and update their predictions.

Understanding this shift is crucial. Once you see hiring as a continuous scoring process, the real question changes. It’s no longer “Why did AI reject me?” but “What was AI measuring at each stage?” That’s exactly what we’ll break down next.

What “AI Scoring” Really Means in Modern Hiring Systems

When companies talk about “AI scoring,” it can sound abstract or overly technical. In practice, it’s much simpler than most people expect. Scoring is just how modern hiring systems organize uncertainty. Instead of asking “Is this candidate qualified?”, AI systems in 2026 are asking a different question: “How likely is this candidate to succeed here, compared to others?”

From Rules to Probabilities

Older applicant tracking systems worked like rigid checklists. You either matched the required keywords, years of experience, or degree filters, or you didn’t. Today’s systems have moved away from fixed rules and toward probability-based models. AI no longer tries to prove that you are “qualified.” It estimates the likelihood that you will perform well, pass later stages, and stay in the role. Every signal you generate nudges that probability up or down, even if only slightly.
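The contrast between a rigid checklist and a probability estimate can be sketched in a few lines. The feature names, thresholds, and weights below are hypothetical; a real model would learn its weights from historical hiring data and use far more signals. The logistic function is used here only because it is a common, simple way to turn a weighted sum into a probability, not because any specific vendor is known to use it.

```python
import math

# Hypothetical feature values for one candidate, each scaled to 0..1.
candidate = {"skills_match": 0.8, "years_experience": 0.4, "has_degree": 0.0}


def checklist_filter(features: dict[str, float]) -> bool:
    """Old-style ATS rule: every requirement must be met, or the candidate is out."""
    return (
        features["skills_match"] >= 0.7
        and features["years_experience"] >= 0.5
        and features["has_degree"] == 1.0
    )


# Illustrative weights only; a real model would learn these from data.
WEIGHTS = {"skills_match": 3.0, "years_experience": 1.5, "has_degree": 0.5}
BIAS = -2.0


def success_probability(features: dict[str, float]) -> float:
    """Logistic model: each signal nudges the estimate up or down instead of gating it."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


print(checklist_filter(candidate))               # False (fails two hard rules)
print(round(success_probability(candidate), 3))  # 0.731
```

The checklist simply rejects this candidate. The probability model instead returns roughly 0.73, an estimate that later stages can still push up or down.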

Scores Are Contextual, Not Absolute

One of the biggest misconceptions about AI scoring is the idea of a universal score. In reality, scores are deeply contextual. The same candidate can rank highly for one role and poorly for another. A profile that looks strong at a startup might score differently at a large enterprise. Even within the same company, your score can shift as you move from resume review to interviews. There is no such thing as a permanently “high-scoring” candidate across all situations.
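One way to picture contextual scoring is a single set of candidate signals combined with different role-specific weights. Everything here is hypothetical: the signal names, the two role profiles, and the weights are invented for illustration.

```python
# Same candidate, two hypothetical role profiles with different weightings.
candidate = {"depth_in_one_stack": 0.9, "breadth_across_roles": 0.4, "process_rigor": 0.5}

ROLE_WEIGHTS = {
    # Illustrative weights only; each set sums to 1.0.
    "startup_generalist": {"depth_in_one_stack": 0.2, "breadth_across_roles": 0.6, "process_rigor": 0.2},
    "enterprise_specialist": {"depth_in_one_stack": 0.6, "breadth_across_roles": 0.1, "process_rigor": 0.3},
}


def role_score(features: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of the same signals; only the weights change with the role."""
    return round(sum(weights[name] * features[name] for name in weights), 2)


for role, weights in ROLE_WEIGHTS.items():
    print(role, role_score(candidate, weights))
```

The candidate’s signals are identical on both lines of output. The gap between 0.52 and 0.73 comes entirely from what each role weights, which is why there is no universal score.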

Humans Still Decide, But AI Sets the Frame

Despite the automation, humans still make final hiring decisions. What AI controls is the frame of attention. It determines who gets reviewed first, who gets more time, and whose profile appears stronger by comparison. This is better understood as ranking and prioritization rather than elimination. Recruiters are choosing from an ordered list, not judging in a vacuum.
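The difference between ranking and elimination is easy to see in code. This minimal sketch assumes each candidate already has a single overall score (the names and values are made up) and returns the order in which a recruiter would encounter them.

```python
# Hypothetical overall scores; the system orders candidates rather than rejecting them.
scores = {"Avery": 0.71, "Blake": 0.78, "Casey": 0.64, "Devon": 0.78, "Emery": 0.69}


def review_queue(candidate_scores: dict[str, float], top_n: int) -> list[str]:
    """Return the names a recruiter would see first, highest score first.

    Ties are broken alphabetically so the order is deterministic.
    """
    ranked = sorted(candidate_scores.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:top_n]]


print(review_queue(scores, top_n=3))  # ['Blake', 'Devon', 'Avery']
```

Nothing in this function rejects Casey. Casey simply lands at the bottom of the list and may never be reached if the recruiter’s time runs out, which is what “setting the frame” looks like in practice.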

In the next section, we’ll look at how these scores are actually shaped at each stage of the hiring pipeline — and what AI is observing along the way.

Where AI Scores You Across the 2026 Hiring Pipeline

One of the biggest misunderstandings about AI in hiring is the idea that scoring happens at a single moment. In reality, there is no single “AI decision point.” Scoring is distributed across the entire hiring pipeline. Each stage generates new signals, and those signals accumulate over time. What matters is not one performance, but the pattern that forms as you move forward.

Below is a simplified view of how AI scoring typically works across a modern 2026 hiring pipeline.

| Hiring Stage | What AI Observes | How It’s Scored | Common Candidate Mistakes |
|---|---|---|---|
| Resume and profile evaluation | Skills, role alignment, career progression, language clarity | Compared against role-specific benchmarks and past successful profiles | Overloading resumes with keywords, unclear role impact, inconsistent timelines |
| Online assessments and timed tests | Accuracy, speed, problem-solving patterns, consistency | Performance normalized against large candidate pools | Rushing answers, ignoring instructions, uneven performance across sections |
| Asynchronous or live video interviews | Answer structure, relevance, clarity, pacing, confidence signals | Response quality and coherence weighted by role expectations | Rambling answers, weak structure, missing key points |
| Technical interviews and collaborative coding | Problem-solving approach, code readability, explanation quality | Emphasis on process signals, not just final output | Writing correct code without explaining reasoning |
| Post-interview signal aggregation | Cross-stage consistency, trend direction, comparative ranking | Scores combined into an overall probability estimate | Assuming one strong round cancels out earlier weak signals |
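The assessment row mentions scores being “normalized against large candidate pools.” Two common ways to normalize a score are a z-score and a percentile rank. The sketch below applies both to a small invented pool; whether any particular assessment vendor uses either method is an assumption, not something this article can confirm.

```python
from statistics import mean, pstdev

# Hypothetical raw scores from a pool of candidates on the same timed test.
pool = [52, 61, 58, 70, 66, 49, 73, 64, 55, 60]


def z_score(raw: float, pool_scores: list[float]) -> float:
    """How many standard deviations a raw score sits above or below the pool mean."""
    return (raw - mean(pool_scores)) / pstdev(pool_scores)


def percentile_rank(raw: float, pool_scores: list[float]) -> float:
    """Percentage of the pool scoring strictly below the raw score."""
    below = sum(1 for score in pool_scores if score < raw)
    return 100.0 * below / len(pool_scores)


print(round(z_score(66, pool), 2))  # 0.71
print(percentile_rank(66, pool))    # 70.0
```

A raw 66 only becomes meaningful once it is placed against the pool: here it sits about 0.7 standard deviations above the mean and ahead of 70% of test takers. The same 66 would rank lower against a stronger pool.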

What’s important to notice here is that a weak result at any single stage is rarely fatal on its own. A mediocre resume won’t automatically eliminate you. Neither will a slightly awkward interview answer. AI systems are designed to tolerate noise. What they react to strongly is repetition.

This is why “I did fine in every round” can still end in rejection. If your signals are consistently average, slightly unclear, or just less structured than others, the system doesn’t see a failure. It sees a pattern. Over time, that pattern pushes your overall score lower relative to candidates whose signals trend upward.
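The table above lists “trend direction” as one aggregated signal. A simple way to measure a trend is the least-squares slope of scores across stages. This is a hypothetical illustration: the stage scores are invented, and real systems may weight trends very differently or not at all.

```python
def trend_slope(stage_scores: list[float]) -> float:
    """Least-squares slope of score versus stage index (0, 1, 2, ...).

    Positive means signals are improving across stages; negative means drifting down.
    """
    n = len(stage_scores)
    if n < 2:
        raise ValueError("need at least two stages to measure a trend")
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(stage_scores) / n
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, stage_scores))
    denominator = sum((x - x_mean) ** 2 for x in xs)
    return numerator / denominator


steady_average = [70, 70, 69, 68]  # "I did fine in every round"
rising = [66, 69, 72, 75]          # weaker start, clear upward pattern

print(round(trend_slope(steady_average), 2))  # -0.7
print(round(trend_slope(rising), 2))          # 3.0
```

The averages are close (69.25 versus 70.5), but the slopes point in opposite directions. The first candidate is drifting slightly downward while the second is clearly improving.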

Another common issue is mixed signaling. For example, strong technical performance paired with weak communication can confuse scoring models. Humans might interpret this nuance generously. AI systems are more literal. They aggregate what they can measure, not what they can infer.

Understanding this accumulation effect is key. You’re not being judged on isolated moments, but on how your signals behave together across stages. In the next section, we’ll look at where this process often breaks down — and the specific signals AI tends to overvalue or misunderstand.

The Signals AI Overweights (and What It Often Gets Wrong)

By this point, it should be clear that AI scoring isn’t malicious or intentionally unfair. But it is imperfect. Modern hiring systems are built to detect patterns efficiently, which means some signals get amplified while others are quietly ignored. Understanding these blind spots helps explain why capable candidates sometimes feel unseen.

Signals AI Loves Too Much

AI systems strongly favor signals that are easy to measure and compare. Clear structure, well-organized answers, consistent pacing, and relevant keywords all score well because they are predictable and repeatable. When responses follow a logical flow and stay tightly aligned with the question, models can confidently interpret them.

The downside is that this often disadvantages strong candidates who don’t think out loud in a linear way. People who pause, explore ideas verbally, or refine their answer as they speak may come across as less coherent to an algorithm, even if their underlying reasoning is solid. The issue isn’t a lack of ability, but a mismatch between how humans naturally communicate and how machines prefer to process language.
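Real hiring systems use far more sophisticated language models than simple word overlap, so treat this as a caricature. It only shows how any measure that rewards tight alignment with a question’s wording can score a sound but exploratory answer as less relevant. The question and both answers are invented.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set; a deliberately crude stand-in for real language models."""
    return set(re.findall(r"[a-z']+", text.lower()))


def overlap_score(question: str, answer: str) -> float:
    """Share of the question's distinct words that reappear in the answer (0 to 1)."""
    question_words = tokens(question)
    return len(question_words & tokens(answer)) / len(question_words)


question = "How did you reduce deployment time?"
# Two hypothetical answers describing the same fix.
linear_answer = "To reduce deployment time, I cached build artifacts, and deployment time dropped noticeably."
exploratory_answer = "Hmm, we first asked why builds were slow, and caching artifacts turned out to fix it."

print(overlap_score(question, linear_answer))       # 0.5
print(overlap_score(question, exploratory_answer))  # 0.0
```

Both answers describe the same caching fix, but the exploratory one shares no words with the question and scores zero on this crude measure.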

Signals AI Still Struggles With

On the other side are signals that remain difficult to quantify. Potential, adaptability, and long-term growth don’t translate neatly into data points. Neither do unconventional career paths or candidates who thrive in ambiguous environments. AI systems tend to favor consistency, which means nonlinear trajectories can look risky rather than promising.

This is especially true for candidates who are “a bit unusual but very strong.” They might have sharp insights, creative problem-solving skills, or deep domain intuition that doesn’t show up clearly in standardized responses. Humans can often recognize this quickly. AI models, however, struggle to reward what they can’t reliably measure.

Because of this gap, some candidates choose tools like Sensei AI to help maintain structure and clarity in real time, ensuring their key points are communicated cleanly as interviews unfold. These tools don’t change who the candidate is, but they can reduce the chance of being misread by automated systems.

Once you understand which signals are overvalued and which are underdetected, the next question becomes practical. If AI scoring works this way, how should candidates adjust their preparation without losing authenticity? That’s what we’ll explore next.


Preparing for AI Scoring Without Sounding Like a Robot

Preparing for AI scoring doesn’t mean changing who you are or memorizing scripted answers. The goal is simpler and more human: reduce the chances that your ideas are misunderstood. When clarity improves, both AI systems and human interviewers can focus on what actually matters — your thinking.

Designing Your Answers for Clarity First

Clear communication consistently outperforms impressive vocabulary. AI systems care far more about whether your answer maps cleanly to the question than how sophisticated your language sounds. Using a cause-and-effect structure helps anchor your response. Start with the situation, explain what you did, and end with the outcome. This result-oriented flow makes it easier for models to identify intent, relevance, and impact without guessing.
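If it helps to practice the situation, action, outcome flow deliberately, a small template can flag which part of a draft answer is missing. This is a hypothetical practice aid written for illustration, not a feature of any hiring platform.

```python
from dataclasses import dataclass


@dataclass
class StructuredAnswer:
    """Hypothetical practice template for a situation -> action -> outcome answer."""

    situation: str
    action: str
    outcome: str

    def missing_parts(self) -> list[str]:
        """Names of any sections that are still empty."""
        return [
            name
            for name in ("situation", "action", "outcome")
            if not getattr(self, name).strip()
        ]

    def render(self) -> str:
        """Join the non-empty parts in cause-and-effect order."""
        parts = (self.situation, self.action, self.outcome)
        return " ".join(part.strip() for part in parts if part.strip())


draft = StructuredAnswer(
    situation="Our nightly data export was failing about once a week.",
    action="I added retries with alerting and traced the failures to a timeout.",
    outcome="",
)
print(draft.missing_parts())  # ['outcome']
print(draft.render())
```

This draft explains the problem and the fix but never states the result, which is exactly the part a reviewer, human or machine, needs in order to judge impact.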

Reducing Cognitive Load for Both AI and Humans

Strong answers are easy to follow. When responses are structured, they require less mental effort to process, which benefits both machines and people. Consider the difference between an answer that jumps between ideas and one that clearly frames the problem, walks through the approach, and then summarizes the result. Even when the content is identical, the second version produces cleaner signals because the logic is explicit rather than implied.

Practice With Feedback, Not Just Repetition

Rehearsing answers repeatedly isn’t the same as improving them. Real progress comes from feedback that reveals where your message becomes unclear or overly dense. Some candidates use tools like Sensei AI’s AI Playground to test different ways of expressing the same idea, adjusting structure and emphasis until the logic comes through more cleanly. This kind of practice focuses on communication quality, not memorization.

The key takeaway is that effective preparation is about alignment, not performance. You’re aligning the way you express your thinking with the way it will be interpreted. That distinction becomes even more important in technical interviews and coding exercises, where scoring logic follows a different set of rules altogether.


How AI Evaluates Technical and Coding Interviews in 2026

In technical and coding interviews, AI scoring works a little differently from earlier stages. While correctness still matters, modern systems place significant weight on process signals — how you think, not just what you produce. In 2026, writing code that passes test cases is rarely enough on its own.

AI systems closely observe code readability, naming conventions, and structural clarity. Descriptive variable names, logical function boundaries, and consistent formatting all act as signals of maintainability. These elements suggest how someone might work in a real codebase, where collaboration and long-term clarity matter more than clever shortcuts. Similarly, explaining your reasoning as you go helps models understand intent, not just output.
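The readability point is easiest to see side by side. Both functions below behave identically; only the names, type hints, and docstring differ. This illustrates the kind of difference described above and makes no claim about how any specific platform scores code.

```python
# Version 1: correct, but the names carry no intent.
def f(a, b):
    r = []
    for x in a:
        if x[1] > b:
            r.append(x[0])
    return r


# Version 2: identical behavior, with names and a docstring that explain intent.
def names_over_budget(expenses: list[tuple[str, float]], budget_limit: float) -> list[str]:
    """Return the names of expense items whose amount exceeds the budget limit."""
    return [name for name, amount in expenses if amount > budget_limit]


expenses = [("laptop", 1800.0), ("monitor", 350.0), ("chair", 620.0)]
assert f(expenses, 500.0) == names_over_budget(expenses, 500.0) == ["laptop", "chair"]
```

A test suite cannot tell these apart, but a reviewer reading the second version understands what it does without tracing the loop.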

This is why “writing fast but staying silent” can actually be risky. Speed without explanation often produces ambiguous signals. AI may see a correct solution, but it also sees gaps in communication, uncertainty about intent, or a lack of structured problem-solving. From a scoring perspective, that ambiguity lowers confidence in predicting on-the-job performance.

Remote coding platforms also generate behavioral data beyond the code itself. Pauses, revisions, backtracking, and how candidates respond to hints or constraints all contribute context. These patterns help AI infer how someone handles uncertainty, debugging, and evolving requirements — all common realities in real engineering work.
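To give a sense of what “behavioral data” can mean, here is a hypothetical sketch that reduces an editor event log to two numbers: long pauses and deletions. The event format and the 20-second pause threshold are assumptions. Counts like these say nothing on their own; they would need context before anyone could read them as hesitation or as careful debugging.

```python
from dataclasses import dataclass


@dataclass
class EditEvent:
    """One hypothetical editor event: seconds since start and the kind of edit."""

    t: float
    kind: str  # "insert" or "delete"


def summarize_session(events: list[EditEvent], pause_threshold: float = 20.0) -> dict[str, int]:
    """Count long gaps between consecutive events and how many edits were deletions."""
    long_pauses = sum(
        1 for prev, cur in zip(events, events[1:]) if cur.t - prev.t >= pause_threshold
    )
    deletions = sum(1 for event in events if event.kind == "delete")
    return {"long_pauses": long_pauses, "deletions": deletions}


session = [
    EditEvent(0.0, "insert"),
    EditEvent(4.5, "insert"),
    EditEvent(31.0, "delete"),  # long pause, then backtracking
    EditEvent(33.0, "insert"),
    EditEvent(60.0, "insert"),  # another long pause
]
print(summarize_session(session))  # {'long_pauses': 2, 'deletions': 1}
```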

In this context, some candidates use tools like Sensei AI’s Coding Copilot to help articulate their thought process during technical interviews. The role of such tools isn’t to solve problems automatically, but to assist in expressing logic and structure clearly as challenges unfold.

At the end of the day, even the most advanced scoring systems are trying to answer a simple question: can this person do the job effectively? Technical AI evaluation isn’t about perfection. It’s about predicting how someone will think, communicate, and collaborate once the interview ends.


What This Means for Candidates Going Forward

By now, it should be clear that AI scoring is not a single obstacle to overcome, but a system you move through. From the first resume review to the final interview, hiring decisions in 2026 are shaped by accumulated signals rather than isolated moments. Once you understand that, the process becomes less mysterious and more navigable.

The real advantage isn’t trying to outsmart AI. It’s understanding how it interprets information. When you know that scoring is continuous and comparative, you can focus on reducing misalignment instead of chasing perfection. This shift in mindset alone helps many candidates feel more in control of their search.

It’s also worth reframing the role of AI entirely. These systems aren’t designed to be adversaries. They’re built to organize large volumes of information in a way humans can act on. In that sense, AI is something you communicate with, not something you fight against. Clear signals lead to clearer interpretation.

Hiring hasn’t become colder or more impersonal. It’s become more structured. The candidates who stand out are often the ones who make their thinking easy to follow. That doesn’t mean being loud, polished, or charismatic. It means expressing ideas in a way that others — human or machine — can understand without extra effort.

The good news is that this is a learnable skill. Communication improves with awareness and practice. When clear expression is paired with real ability, the combination remains powerful no matter how advanced hiring systems become.

AI may shape the process, but it doesn’t define your value. Understanding the system simply gives you a better way to show it.

FAQs

What is the 30% rule in AI?

The “30% rule” is an informal way to describe how AI-driven systems often make decisions based on partial but sufficient confidence, rather than complete certainty. In hiring and evaluation contexts, this means early signals — such as initial screening scores, keyword alignment, or early interview performance — can account for a significant portion of how a candidate is perceived. Once that baseline is set, later inputs tend to adjust the score rather than redefine it entirely. The takeaway is that consistency matters more than single standout moments.

What is the AI prediction for 2026?

By 2026, AI is expected to move from experimental support tools into core decision infrastructure across most industries. Instead of isolated use cases, AI will increasingly connect resume screening, assessments, interviews, and performance data into unified systems. This doesn’t remove human judgment, but it changes when and how humans intervene. Decisions will rely more on pattern recognition and comparative scoring, with humans focusing on edge cases and final validation.

How will AI impact jobs in the next 5 years?

AI is less likely to eliminate entire professions than to reshape how roles are defined. Repetitive, rule-based tasks will continue to shrink, while jobs that require judgment, coordination, and communication will expand in scope. Many roles will split into two tracks: one focused on execution with AI assistance, and another focused on oversight, decision-making, and synthesis. Adaptability will become more valuable than any single technical skill.

Is AI pushing us to break the talent pipeline?

In one sense, yes, though not by lowering standards. AI exposes inefficiencies in traditional pipelines that relied on linear career paths and slow signaling. Skills develop faster than credentials, and AI systems can surface potential earlier than human networks alone. This puts pressure on outdated hiring models while creating space for non-traditional candidates. The pipeline isn’t so much breaking as being re-routed around skills, evidence, and demonstrated capability rather than pedigree.

Shin Yang

Shin Yang is a growth strategist at Sensei AI, focusing on SEO, market expansion, and customer support. He uses his expertise in digital marketing to improve visibility and user engagement, helping job seekers make the most of Sensei AI's real-time interview assistance. His work ensures that candidates have a smoother experience navigating the job application process.


Sensei AI

hi@senseicopilot.com